You put a few lines of text into an AI content generator, and in a matter of seconds, you have polished work that is ready to be published. It sounds like magic, doesn’t it? But what if the same magic has a hidden consequence, like your private information leaking out into the air?

We had no idea how much AI-powered marketing tools would change marketing. Just a few years ago, automation meant scheduling posts or running ad optimizations. Now, entire campaigns, from strategy to execution, can be generated, refined, and even managed by AI systems. That’s thrilling for productivity, but terrifying for privacy. Every prompt, file, or dataset you upload might expose something you never intended to share.

In this post, I’ll walk you through how AI copy tools, chatbots, and personalization engines work — where they shine, and where they might betray your trust. You’ll also see how hidden tracking apps sneak under your radar, and how smart use can still make these tools assets — not liabilities.

But here’s the thing: most of us don’t think twice before hitting “generate.” We trust that the systems we use are secure, that our data will quietly vanish once we close the tab. But behind every prompt is a complicated network of servers, third-party integrations, and data models that can store, analyze, or even reuse what you give them.

It’s easy to forget that AI tools for marketers learn from us, and occasionally, they learn too well. The same technology that can make great ads in seconds can also take in trade secrets, consumer information, or personal insights that we never meant to reveal. That’s why knowing where your data goes isn’t just a good idea; it’s essential in the digital marketing world of 2025.

And if you’re already looking into AI tools for marketing and communication, platforms like Phonsee show that new ideas can work alongside solid privacy protections. This shows that security doesn’t have to come at the expense of convenience. At the same time, location-based services like Scannero show that it is possible to track and monitor people online in a responsible way. The idea isn’t to avoid utilizing smart tools; it’s to recognize their limits, know where your data flows, and pick platforms that put security and performance on the same level.

The Promise and The Risk

AI marketing tools are quite tempting. They offer speed, scale, and personalization that human teams can’t always match. Want to spin out ten blog intros or tweak headlines in bulk? No problem. Want hyper-personalized emails tailored to every customer’s behavior? Also possible.

But here’s a jarring stat: 77% of data leakage events in enterprises now trace back to employees pasting sensitive content into personal AI tools — a risky channel many security systems don’t even monitor. What makes this worse is that such leaks often go unnoticed for months. Unlike a clear security breach, these small, cumulative data exposures happen invisibly.

This is the core tension: AI gives you superpowers — but with inadequate guardrails, it hands power to unintended audiences. Marketers, hungry for speed, often forget that AI tools are not private workspaces. They’re shared ecosystems that may log, process, or even train on your inputs.

How AI Copy Tools May Leak Data

When marketers use tools like Jasper, Copy.ai, or write prompts in ChatGPT, they often paste in bits of customer data, brand voice documents, or proprietary lists. Unless the service clearly states it won’t use your inputs for model training, that data may be retained, stored, or even fed back in responses to others.

Think about it: you paste in a few VIP customer testimonials or quotes. The tool may use that text, store it in logs, or incorporate it in training sets. Later, someone else asks a related prompt and gets fragments back. That’s a data bleed.

Worst of all: some AI tools don’t clarify retention or access policies. Combine that with shadow usage (employees using public AI tools outside IT awareness), and you face blind spots.

So what can you do? Always vet the AI service’s privacy terms, disable training on your data when possible, and avoid revealing customer-identifiable facts in prompts. Consider using company-managed AI tools instead of free public ones. These may not be as flexible but are significantly safer.

Also, remember that every copy-paste carries a risk. When you train your team, emphasize that AI isn’t a sandbox — it’s a networked system. What enters the system may never truly disappear.
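To make the "avoid customer-identifiable facts in prompts" advice concrete, here is a minimal Python sketch that scrubs a prompt before it leaves your machine. The `redact_prompt` helper and the two regular expressions are assumptions made for illustration. They catch only simple email and phone formats, so treat this as a starting point rather than a complete PII filter.

```python
import re

# Illustrative patterns only: real PII detection needs much broader coverage
# (names, addresses, account numbers, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_prompt(text: str) -> str:
    """Replace anything matching a pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    raw = "Rewrite this testimonial from jane.doe@example.com, phone +1 (555) 010-2345."
    print(redact_prompt(raw))
```

Running a step like this in a shared prompt template or browser extension means the habit doesn’t depend on every marketer remembering it under deadline pressure.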

Chatbots & Personalization Engines: Friend or Foe?

Chatbots and personalization engines (the “you might also like” or dynamic content algorithms) are the frontline of marketing engagement. But they too handle data — from behavioral patterns to profile attributes. If misconfigured, they leak or misuse that data.

- A chatbot may log full conversations (including PII) to external servers.

- A personalization engine may share segmentation data with ad networks or tracking backends without you fully knowing.

- APIs and SDKs embedded in these tools often rely on third parties, and that is opaque data flow territory.

These leaks are stealthy — they don’t always look like “file breach” events. They’re hidden behind APIs, endpoints, and code you didn’t write.

However, chatbots can be valuable when used correctly. They improve customer service, gather information, and increase engagement. The key is to configure them with the right data access constraints and anonymization rules. Always check what data your bot logs and where it goes. A few easy changes can make the difference between being innovative and being exposed.
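As a rough illustration of an anonymization rule, the sketch below shows one way a chatbot could prepare a log record: the user ID is replaced with a keyed hash and email addresses are masked before anything is written. The function names, the record layout, and the single email pattern are all hypothetical, and a real deployment would need to cover more kinds of PII and manage the secret key properly.

```python
import hashlib
import hmac
import json
import re

# Illustrative pattern: only simple email addresses are masked here.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(user_id: str, secret: bytes) -> str:
    """Keyed hash: stable for the same user, not reversible without the secret."""
    digest = hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def build_log_record(user_id: str, message: str, secret: bytes) -> str:
    """Return a JSON log line that carries no raw user ID or email address."""
    record = {
        "user": pseudonymize(user_id, secret),
        "message": EMAIL.sub("[EMAIL]", message),
    }
    return json.dumps(record)


if __name__ == "__main__":
    # The secret is hard-coded only for this demo; load it from a secrets store.
    print(build_log_record("customer-42", "Reach me at ana@example.com", b"demo-secret"))
```

Because the hash is stable, you can still count sessions per user or trace a conversation thread, without the log itself revealing who that user is.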

Hidden Tracking Apps & Shadow Behavior

Let me zoom in on one of the slipperiest threats: hidden tracking apps and shadow AI usage.

Many apps embed invisible trackers or aggressive SDKs that collect behavior, location, or even sensor data, which is data you never knowingly agreed to share. These trackers may feed ad networks or data brokers. On top of that, employees or marketers may experiment with AI apps on the side (shadow AI), using tools not approved by IT or security. That’s a double blind spot.

When used wisely, tracking tools and AI engines can help your business — understanding audiences, improving relevance, optimizing spend. They become risky only when ignored, misconfigured, or wielded without oversight. Transparency with both users and teams is key. Employees must know which AI tools are approved, and customers must know how their data is used. When trust is part of the workflow, compliance becomes easier — and reputation stronger.
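One small, practical form of oversight is simply knowing which outside hosts your own pages load scripts from. The Python sketch below is an assumption-laden example: it parses a page’s HTML with the standard library, collects the host of every external script tag, and reports the ones missing from an approved list that you maintain. The host names are placeholders, and it will not see trackers that are injected at runtime or bundled inside mobile SDKs.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptSourceCollector(HTMLParser):
    """Collects the host of every <script src="..."> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        if tag != "script":
            return
        src = dict(attrs).get("src")
        if src:
            host = urlparse(src).netloc  # empty for same-site relative paths
            if host:
                self.hosts.add(host)


def unapproved_script_hosts(html: str, approved: set[str]) -> set[str]:
    """Return external script hosts that are not on the approved list."""
    collector = ScriptSourceCollector()
    collector.feed(html)
    return collector.hosts - approved


if __name__ == "__main__":
    page = (
        '<script src="https://cdn.example.com/app.js"></script>'
        '<script src="https://tracker.example.net/t.js"></script>'
    )
    print(unapproved_script_hosts(page, {"cdn.example.com"}))
```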

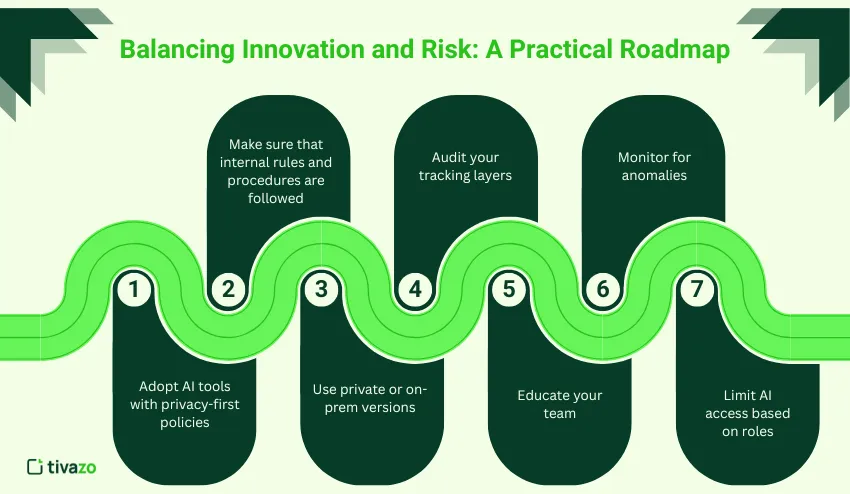

Balancing Innovation and Risk: A Practical Roadmap

You don’t have to choose between “all in” and “do nothing.” Here’s how to get value while managing risk.

- Adopt AI tools with privacy-first policies

- Choose vendors that let you disable training on your data and guarantee data deletion.

- Make sure that internal rules and procedures are followed.

- Set rules for what data can be used in AI prompts. Don’t let anyone paste sensitive PII. Monitor AI usage signals such as copy-paste activity into AI tools. Use data loss prevention (DLP) tools that catch risky AI interactions.

- Use private or on-prem versions

- When confidentiality matters, use self-hosted models or closed-system AI instances. This avoids sending data to third-party clouds.

- Audit your tracking layers

- Regularly review SDKs, third-party code, and scripts for hidden trackers or data exfiltration. Forbes recently called these “dark supply chains,” warning that many marketing apps leak data through invisible dependencies.

- Educate your team

- Train marketers on how AI handles data. Remind them: AI is not a blackboard — it records. Encourage safe prompt habits.

- Monitor for anomalies

- Track AI usage patterns. Unexpected spikes or new integrations may signal unapproved tools or shadow usage.

- Limit AI access based on roles

- Give employees only the level of AI access they truly need. Restrict sensitive features, confidential data inputs, and administrative controls to minimize internal misuse and reduce accidental data exposure. This helps maintain security without slowing down workflows.

By following this roadmap, you not only secure your company but also empower your team to innovate responsibly. When employees understand the “why” behind these rules, compliance stops feeling restrictive — it becomes second nature.
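Two items on the roadmap, role-based access and anomaly monitoring, are easy to picture in code. The Python sketch below is only an illustration: the role names, tool names, and the "three times the usual median" threshold are all assumptions, and a real setup would pull this data from your identity provider and usage logs rather than from hard-coded dictionaries.

```python
from statistics import median

# Hypothetical policy: which roles may use which AI tools.
ROLE_TOOLS = {
    "copywriter": {"internal-llm"},
    "analyst": {"internal-llm", "segmentation-engine"},
}


def is_allowed(role: str, tool: str) -> bool:
    """Unknown roles get no access by default."""
    return tool in ROLE_TOOLS.get(role, set())


def flag_spikes(daily_counts: dict[str, list[int]], factor: float = 3.0) -> list[str]:
    """Return users whose latest daily prompt count exceeds
    `factor` times the median of their earlier days."""
    flagged = []
    for user, counts in daily_counts.items():
        if len(counts) < 2:
            continue  # not enough history to compare against
        baseline = median(counts[:-1])
        # Users with a zero baseline are skipped in this simple version.
        if baseline > 0 and counts[-1] > factor * baseline:
            flagged.append(user)
    return flagged


if __name__ == "__main__":
    print(is_allowed("copywriter", "segmentation-engine"))
    print(flag_spikes({"ana": [10, 12, 11, 60], "ben": [10, 12, 11, 13]}))
```

A flag here is a prompt for a conversation, not proof of misuse. A spike can just as easily mean a campaign launch as a shadow tool.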

Why This Matters

In 2025, AI tools already drive the greatest volume of enterprise data leaks, thanks to unmanaged accounts and careless prompt use. IBM reports that 13% of organizations had breaches in AI applications last year, and 97% of those lacked proper access controls.

What this means is that most of these incidents are preventable. Data security isn’t just an IT issue anymore; it’s a marketing issue, a brand issue, and a trust issue. Customers are more careful about their privacy than ever before. They notice when a company doesn’t take it seriously, and they reward companies that do.

AI-powered marketing tools may change the way you work by automating, scaling, and improving workflows, but only if you put safety measures in place. The tools themselves aren’t dangerous. The danger emerges when they work without rules and supervision.

Conclusions

AI-powered marketing tools like text generators, chatbots, and personalization engines can change the game when it comes to speed, engagement, and scale. But they also make it easier for data to leak, especially through copy-paste, shadow usage, and stealth SDKs.

The solution isn’t to avoid AI. It’s to use it smartly. Vet your vendors, enforce internal rules, audit third-party code, train your teams, and keep an eye on how the tools are being used. Be careful with these new powers, and treat the data you work with carefully.

At its best, AI is like electricity: strong, unseen, and life-changing. It needs insulation, just like electricity does. When you take the right steps, you get the power without the shocks.

Handled this way, AI shifts from a liability to a strategic asset: innovation that protects as much as it propels. The future of marketing doesn’t belong to those who use AI recklessly; it belongs to those who use it responsibly.

AI will keep evolving, faster than regulations can catch up. That means marketers must become their own guardians of ethics and privacy, not just rely on compliance checklists. Transparency, accountability, and empathy are the new differentiators. In the end, the most powerful marketing isn’t powered by data alone — it’s powered by trust.