Every product succeeds or fails on its ability to satisfy customers’ needs. What is the fastest way to find out what customers think? Ask them directly. That is where a product feedback survey comes in.

A product feedback survey is not just a collection of questions. It is a tool that shows you what people like, what annoys them, and what they want to see. Implemented properly, it can help shape your roadmap, increase customer satisfaction, and prevent expensive mistakes.

This guide covers every aspect of a product feedback survey: what it is, and how to design, run, and analyze one. It also includes examples, templates, and tools, as well as a working checklist so you can start your own survey with confidence.

What Is a Product Feedback Survey?

A product feedback survey is a series of questions designed to collect the opinions, experiences, and recommendations of a product’s users.

Unlike generic customer satisfaction surveys, product feedback surveys focus on how people interact with your product: its features, usability, performance, and value.

The goal is simple: gather honest insights that you can apply to your product and use to make better business decisions.

Example:

To find out whether its new dashboard is easy to use, a software company might ask users to rate ease of use on a scale of 1–5 and then leave an open-ended comment. This immediate feedback highlights strengths and reveals problems at an early stage.

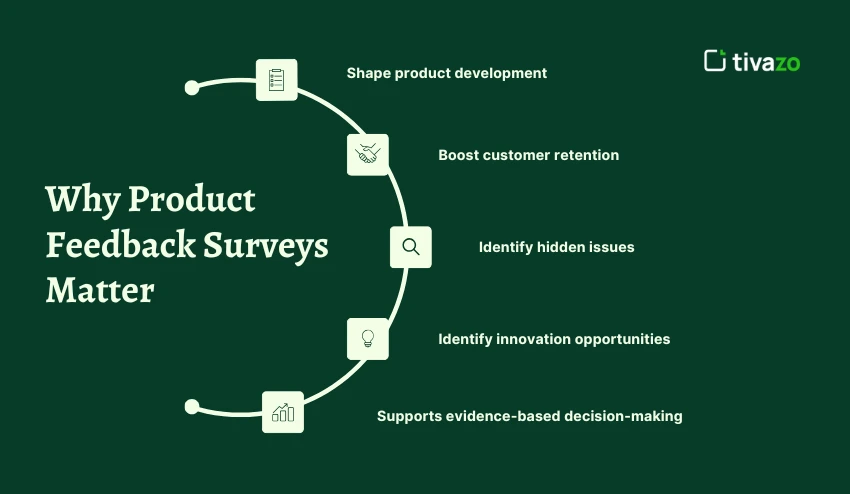

Why Product Feedback Surveys Matter

Gathering feedback is not a waste of time. For most businesses, it is the difference between guesswork and knowing what customers want.

These are the reasons why product feedback surveys are so crucial:

1. Shape product development

Feedback helps to identify what features are the most appreciated by customers and what features should be improved. This will assist in prioritizing your roadmap.

2. Boost customer retention

Users are more likely to remain loyal when they feel heard. Surveys show customers that their views lead to real change.

3. Identify hidden issues

Survey responses often surface bugs, usability frustrations, or workflow confusion that you would otherwise miss.

4. Identify innovation opportunities

Customers often propose new features or use cases that you had not thought of.

5. Support evidence-based decision-making

Teams can act on quantifiable input instead of assumptions.

Types & Variations of Product Feedback Surveys

Not all product feedback surveys share the same objective. The format and timing depend on what you want to capture. The most common types are listed below, along with the situations in which each should be used:

1. Net Promoter Score (NPS) Surveys

Measures: Overall loyalty and likelihood to recommend.

Key question: How likely are you to recommend our product to a friend or colleague on a scale of 0 to 10?

- Promoters (9–10): Loyal advocates.

- Passives (7–8): Satisfied, but not enthusiastic.

- Detractors (0–6): At risk of churn.

Best when: You want to monitor loyalty trends over the long term and predict growth.
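
The three groups above are usually combined into a single score: the percentage of promoters minus the percentage of detractors, giving a value between -100 and 100. The sketch below illustrates that standard calculation; the function name and the sample responses are made up for illustration.

```python
def net_promoter_score(scores):
    """Return NPS (-100 to 100) from a list of 0-10 ratings."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6
    # Passives (7-8) count toward the total but not toward either group.
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical sample: 4 promoters, 3 passives, 3 detractors.
responses = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
print(f"NPS: {net_promoter_score(responses):.0f}")
```

Because passives dilute the score without moving it in either direction, tracking the size of each group alongside the headline number tends to be more informative than the score alone.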

2. Customer Satisfaction (CSAT) Surveys

Measures: Short-term satisfaction with a specific product or experience.

Key question: How satisfied are you with [feature/product experience]?

- Typically rated on a 1–5 or 1–7 scale.

- Easy to benchmark over time.

Best when: After a purchase, a product launch, or a customer service interaction.
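
One common way to turn CSAT ratings into a single benchmark is the share of respondents who chose one of the top two ratings (4 or 5 on a 1–5 scale). This is a convention rather than the only option, and the function name and sample ratings below are illustrative assumptions.

```python
def csat_percent(ratings, scale_max=5):
    """Percentage of respondents who picked one of the top two ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= scale_max - 1)
    return 100 * satisfied / len(ratings)


# Hypothetical ratings on a 1-5 scale: five of eight are 4 or 5.
ratings = [5, 4, 4, 3, 2, 5, 4, 1]
print(f"CSAT: {csat_percent(ratings):.1f}%")
```

For a 1–7 scale, passing `scale_max=7` counts ratings of 6 and 7 as satisfied.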

3. Customer Effort Score (CES) Surveys

Measures: How easy or difficult it is for a customer to complete a task.

Key question: How easy was it to complete your task with our product?

- High effort = frustration and higher churn.

- Low effort = easier adoption and higher satisfaction.

Best when: During SaaS onboarding, checkout, or troubleshooting.

4. Feature-Specific Feedback Surveys

Measures: User reaction to a specific feature or update.

Example questions:

- How important is this feature to you?

- What would you like to see changed with this feature?

Best when: Validating the rollout of a new feature or identifying features that are not being used.

5. Post-Purchase Surveys

Measures: Impressions right after a purchase.

Example questions:

- What did you like about this product today?

- Were you satisfied with the checkout process?

Best when: E-commerce purchases, subscription services, or major upgrades.

6. In-App Micro-Surveys

Measures: Impressions in the moment, while users are interacting with the product.

- Typically 1–3 questions long.

- Triggered contextually (e.g., right after a user completes a task).

Example:

A design application might ask, “How easy was it to export your project just now?”

Best when: You want feedback while the experience is fresh, which reduces recall bias.

7. Long-Form Feedback Surveys

Measures: In-depth feedback on the overall product experience.

- Contains both closed- and open-ended questions.

- Takes more effort to complete, but yields deeper insight.

Best when: Running quarterly or annual product reviews.

Quick Table: When to Use Each Survey

| Survey Type | Best Timing | Primary Purpose | Example Question |
|---|---|---|---|
| NPS | Every 3–6 months | Loyalty tracking | “How likely are you to recommend us?” |
| CSAT | Immediately after interaction | Short-term satisfaction | “How satisfied are you with this update?” |
| CES | During task completion | Usability check | “How easy was it to complete this action?” |
| Feature Survey | Post-launch | Feature validation | “Is this feature valuable to you?” |
| Post-Purchase | 24–48 hours post-transaction | Buying motivation | “What influenced your purchase?” |
| In-App Micro | Real-time | Contextual feedback | “Was this step easy to complete?” |
| Long-Form | Quarterly/Yearly | Full product review | “What do you like least about our product?” |

How to Design an Effective Product Feedback Survey

Creating a good survey is more than just putting together a few questions. You need a design that invites participants to answer honestly, which leads to actionable data. Here is a step-by-step approach:

Step 1 — Define Clear Goals

Before writing the first question, think: What do I want to find out?

- Are you trying to assess the satisfaction of a new feature?

- Are you trying to get to the bottom of your churn rate?

- Or, do you want to prioritize a feature on your next product release?

Professional tip: Keep your goals narrow. A survey that tries to answer too many questions at once tends to produce muddled or meaningless results.

Example:

A SaaS company launching a new dashboard may have a learning goal, “Understand how easy users find the new layout within the first 30 days.”

Step 2 — Choose the Right Question Types

Using multiple question formats, along with essential tools for conducting market research, can keep a survey engaging and provide more comprehensive data.

- Closed-ended (rating scale, multiple choice): Quick to analyze.

- Open-ended: Lets the user explain in their own words.

- Ranking questions: Great for prioritizing the importance of features.

- Yes/No: Fast and easy; use sparingly.

Professional tip: Put open-ended questions at the end, as users tend to abandon surveys that open with too many of them. Respondents who have already worked through the quick questions are also more likely to take the time to give a detailed answer.

Step 3 — Keep It Short

Survey fatigue is a genuine problem. Users are significantly more likely to finish surveys that have fewer than 10 questions.

- Strive for 5–7 essential questions.

- Respect your users’ time: the survey should take less than 3 minutes to complete.

Checklist for short surveys:

- Remove “nice-to-know” questions.

- Do not ask the same general question using different phrasing.

- Take the survey yourself and time how long it takes to complete.

Step 4 — Use Logic & Branching

Not every question is relevant or applies to every user. Using branching logic shows your user that you care about their experience and improves the relevance of your survey as a whole.

For example:

If a user says they have not used a particular feature, do not ask them how helpful that feature is. Instead, branch them to a question like: “Why haven’t you tried this feature yet?”

This will help create a better experience for the user while providing higher quality data.
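
As a minimal sketch of the branching rule described above, the function below chooses a follow-up question based on whether the respondent has used the feature. The answer key `used_feature` and the wording of the rating question are assumptions made for illustration; most survey tools expose the same idea through their own skip-logic settings.

```python
def next_question(answers):
    """Pick the follow-up question based on an earlier answer."""
    if answers.get("used_feature") == "yes":
        # Only users with first-hand experience are asked to rate it.
        return "How helpful was this feature? (1-5)"
    # Everyone else is asked why they have not tried it.
    return "Why haven't you tried this feature yet?"


print(next_question({"used_feature": "yes"}))
print(next_question({"used_feature": "no"}))
```

Treating a missing answer the same as "no" keeps respondents from being asked to rate something they may never have seen.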

Step 5 — Time It Right

When you ask is just as important as what you ask.

- Post-purchase surveys: Send them within 24–48 hours of the transaction.

- In-app feedback: Trigger the feedback request right after the user completes a specific task (rule of thumb: ask only if you actually need feedback on that task).

- Feature launch surveys: Wait until users have had enough time with a new feature to form an informed opinion (2–3 weeks after launch is a reasonable guideline).

Bad timing creates bad survey data. Do not ask about a feature if the user has not had enough time to use that feature.

Step 6 — Test Before Launch

Always test the survey with a small audience before full deployment. Watch for:

- Confusing statements

- Too many questions

- Technical issues (links, triggers not working)

- Completion time over target

Even testing with five people can surface material issues.

Step 7 — Decide on Distribution Channels

How you deliver the survey matters, because it also affects who responds.

- Email: good for engaging loyal customers, but response rates can be low.

- In-app: good for getting immediate reactions from engaged users.

- SMS / Push notifications: good for quick, simple questions.

- Web embeds: good for getting immediate impressions from site visitors.

Example:

Slack often collects feedback directly in the app itself for immediate impressions while the experience is fresh.

Step 8 — Incentivize (When Necessary)

If your response rates are low, consider encouraging participation with an incentive.

- Gift certificates

- Discounts

- Early access to new product features

Important: Incentives should encourage participation without introducing bias. Frame them as a thank-you, not a bribe.

Step 9 — Ensure Anonymity (Optional but Helpful)

Some customers withhold honest criticism because they fear being identified. Anonymity makes them more comfortable answering candidly.

Pro Tip: If you need to identify respondents (e.g., for follow-ups), explain up front how their information will be used.

Step 10 — Close the Loop

The design process doesn’t end when the responses come flooding in. People need to know their responses were worth the time.

- You can share the results publicly or share with the individuals who responded.

- You could also announce any product improvements that are based on survey responses.

For example, Spotify frequently uses the message “You asked, we delivered” when it introduces a user-requested feature.

Sample Questions & Templates

A prepared set of questions helps keep your product feedback survey useful and practical. Below are sample questions for each survey type, followed by a template structure that you can adapt.

Net Promoter Score (NPS)

- On a scale of 0–10, how likely are you to recommend our product to a friend or colleague?

- Follow-up (optional): What is the primary reason for your score?

Customer Satisfaction (CSAT)

- How satisfied are you with [feature/product experience]? (1–5 scale)

- Did this feature meet your expectations? (Yes/No)

Customer Effort Score (CES)

- How easy was it to complete your task? (1–5 scale)

- What made this process hard or easy? (open-ended)

Feature Feedback

- How valuable is this feature to you? (1–5 scale)

- What would you like to see improved about this feature? (open-ended)

- How often do you use this feature? (multiple-choice: Daily / Weekly / Monthly / Rarely)

After-Sales / Customer Service Reviews

- What was your main reason for buying this product? (multiple-choice)

- How likely are you to use this product again? (1–5 scale)

- What was the best thing about your experience? (open-ended)

Example Template Structure

| Question Type | Example Question | Purpose |
|---|---|---|
| Rating Scale | “Rate your satisfaction with the new dashboard.” | Quick quantitative measure |
| Multiple Choice | “Which feature do you use most often?” | Identify top-used features |
| Open-Ended | “What improvements would you like to see?” | Capture qualitative insights |
| Yes/No | “Did the tutorial help you get started?” | Validate onboarding effectiveness |
| Ranking | “Rank these features in order of importance to you.” | Prioritize product development |

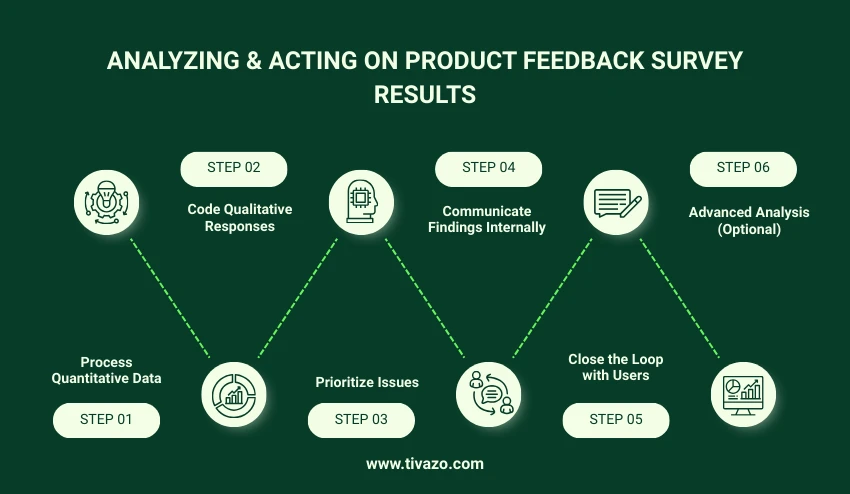

Analyzing & Acting on Product Feedback Survey Results

Gathering feedback through a product feedback survey is not the end. To get real value, you need to interpret the data and turn it into concrete actions.

Step 1 — Process Quantitative Data

- Ratings and multiple-choice answers from your product feedback survey are easy to quantify.

- Calculate averages, percentages, and trends over time.

- Visualize patterns with the help of graphs or charts.

Example:

If 65 percent of users rate a feature 3 out of 5 or lower, your product feedback survey has identified a weak spot that needs improvement.
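
A short sketch of this kind of summary, using only the Python standard library. The ratings are invented so that the low-rating share comes out at 65 percent; the function name and the threshold of 3 are illustrative choices, not fixed rules.

```python
from collections import Counter
from statistics import mean


def summarize(ratings, threshold=3):
    """Return the mean, the share of low ratings, and the distribution."""
    low = sum(1 for r in ratings if r <= threshold)
    return {
        "mean": mean(ratings),
        "low_share_pct": 100 * low / len(ratings),
        "distribution": dict(sorted(Counter(ratings).items())),
    }


# Hypothetical 1-5 ratings from 20 respondents; 13 of them are 3 or lower.
ratings = [1] + [2] * 2 + [3] * 10 + [4] * 3 + [5] * 4
summary = summarize(ratings)
print(summary)
```

The distribution is worth reading alongside the mean, because an average near the middle of the scale can hide a large cluster of lukewarm or unhappy users.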

Step 2 — Code Qualitative Responses

- Open-ended responses from your product feedback survey are richer but harder to analyze.

- Group feedback by theme or category.

- Identify common pain points and recommendations.

Hint: NVivo or spreadsheet tagging can be used to sort qualitative data.
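
For a small batch of responses, theme tagging can also be roughed out in a few lines of code. The sketch below assigns themes by keyword match and counts how often each appears. The theme names, keyword lists, and sample responses are all hypothetical, and plain substring matching is crude (for example, "lag" would also match "flag"), so treat the output as a first pass to review by hand.

```python
from collections import Counter

# Hypothetical themes and keywords; adapt these to your own product.
THEMES = {
    "performance": ["slow", "lag", "crash"],
    "usability": ["confusing", "hard to find", "unclear"],
    "pricing": ["expensive", "price", "cost"],
}


def tag_response(text):
    """Return every theme whose keywords appear in the response."""
    lowered = text.lower()
    return [
        theme
        for theme, keywords in THEMES.items()
        if any(keyword in lowered for keyword in keywords)
    ]


responses = [
    "The dashboard is slow and the export button is hard to find.",
    "Too expensive for what it offers.",
    "Reports crash when I filter by date.",
    "Love it overall!",
]

theme_counts = Counter(t for r in responses for t in tag_response(r))
print(theme_counts.most_common())
```

Responses that match no theme, like the last one here, are worth scanning separately, since they often point to categories you have not defined yet.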

Step 3 — Prioritize Issues

Not all of the feedback from a product feedback survey can be acted on right away. Use frameworks like:

- Impact vs. Effort Matrix: Address high-impact, low-effort changes first.

- Frequency Analysis: Resolve the problems raised by the greatest number of users.
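
One simple way to combine the two frameworks is to rank issues by their impact-to-effort ratio and break ties by how many users mentioned them. This is only one possible scoring scheme; the issue names, the 1–5 impact and effort scores, and the mention counts below are hypothetical values a team would assign itself.

```python
# Hypothetical backlog: impact and effort are team-assigned 1-5 scores,
# mentions is how many survey respondents raised the issue.
issues = [
    {"name": "Slow export", "impact": 5, "effort": 2, "mentions": 42},
    {"name": "Confusing onboarding", "impact": 4, "effort": 4, "mentions": 30},
    {"name": "Dark mode", "impact": 2, "effort": 1, "mentions": 12},
    {"name": "Rebuild reporting engine", "impact": 5, "effort": 5, "mentions": 8},
]


def prioritize(issues):
    """Sort by impact/effort ratio (highest first), then by mentions."""
    return sorted(
        issues,
        key=lambda i: (-(i["impact"] / i["effort"]), -i["mentions"]),
    )


ranked = [issue["name"] for issue in prioritize(issues)]
for position, name in enumerate(ranked, start=1):
    print(position, name)
```

A ratio rewards cheap wins, so a large, high-impact project can sink to the bottom of the list; teams that want to keep such items visible may prefer to plot the scores on a 2x2 matrix instead.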

Step 4 — Communicate Findings Internally

- Share product feedback survey results with product, design, and support teams.

- Do not just share raw data; highlight what can be done.

- Include representative user quotes for context.

Step 5 — Close the Loop with Users

- Let respondents know that their product feedback survey responses led to improvements.

- Share product updates through email, in-app messages, or newsletters.

Example:

Spotify and Slack frequently announce feature updates with a message along the lines of: “You requested it, we made it. Here is what is new!”

Step 6 — Advanced Analysis (Optional)

- Sentiment analysis: Measure the share of open-ended comments from your product feedback survey that are classified as positive, negative, or neutral.

- Text analytics/NLP: Measure how often a given theme or request appears across thousands of responses.

- Integration with BI tools: Link product feedback findings to dashboards so you can monitor the data over time.
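
To make the sentiment-share idea concrete, here is a deliberately naive sketch that labels each comment by counting words from two tiny, made-up word lists and then reports the percentage in each class. It is a toy stand-in, not a real sentiment model: it ignores negation, sarcasm, and context, and a production setup would rely on a dedicated NLP library or service.

```python
import re
from collections import Counter

# Toy word lists for illustration only.
POSITIVE = {"love", "great", "easy", "helpful"}
NEGATIVE = {"slow", "confusing", "broken", "hate"}


def classify(comment):
    """Label a comment by comparing positive and negative word hits."""
    words = set(re.findall(r"[a-z']+", comment.lower()))
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def sentiment_shares(comments):
    """Return the percentage of comments in each sentiment class."""
    counts = Counter(classify(c) for c in comments)
    return {
        label: 100 * counts[label] / len(comments)
        for label in ("positive", "negative", "neutral")
    }


comments = [
    "I love the new dashboard, it is so easy to use",
    "Export is slow and the menu is confusing",
    "It works",
    "Great update but search is broken",
]
shares = sentiment_shares(comments)
print(shares)
```

Mixed comments such as the last one cancel out to neutral here, which is one reason to read a sample of each class before trusting the percentages.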

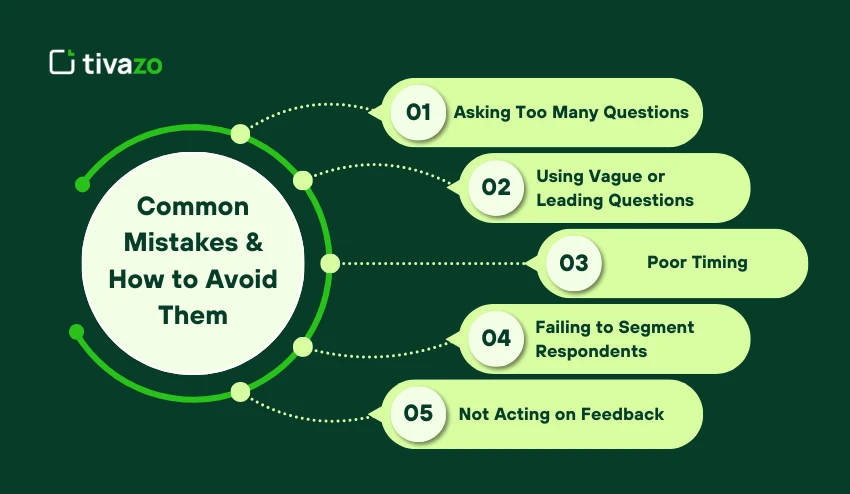

Common Mistakes & How to Avoid Them in Product Feedback Surveys

Even a well-designed product feedback survey can fail if you fall into certain traps. Knowing the common mistakes helps ensure your product feedback survey yields actionable information.

1. Asking Too Many Questions

Long surveys are often associated with lower completion rates and rushed responses, which can result in low-quality data. A focused product feedback survey should consist of five to ten questions that are central to the goal you want to achieve.

2. Using Vague or Leading Questions

Poorly worded questions are another common mistake: they confuse respondents and bias their answers. For example, the question “Don’t you think our new feature is useful?” suggests the answer instead of inviting an unbiased opinion. It is better to ask, “How useful do you think this feature is?” This allows the product feedback survey to gather honest, actionable insights.

3. Poor Timing

Timing matters! If you send a product feedback survey too early, the respondent may not have enough experience to provide a thorough opinion. If you send it too late, they may not be able to recall the details needed to form an opinion. It is best to time your surveys to the user journey, e.g., post-purchase, after onboarding, or after they have used a specific feature.

4. Failing to Segment Respondents

Treating all users alike erodes your ability to gain rich insights. Every user group brings its own perspective. By segmenting your users by factors such as experience, usage frequency, or account type, you can send each group a product feedback survey that collects relevant, usable responses.
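
The same segmentation pays off at analysis time. The sketch below, using invented responses and hypothetical segment labels ("new" and "power"), shows how an overall average can mask a sharp difference between groups.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: each has a user segment and a 1-5 rating.
responses = [
    {"segment": "new", "rating": 2},
    {"segment": "new", "rating": 3},
    {"segment": "new", "rating": 1},
    {"segment": "power", "rating": 5},
    {"segment": "power", "rating": 4},
]


def mean_by_segment(responses):
    """Group ratings by segment and return the mean for each group."""
    groups = defaultdict(list)
    for response in responses:
        groups[response["segment"]].append(response["rating"])
    return {segment: mean(values) for segment, values in groups.items()}


overall = mean(r["rating"] for r in responses)
by_segment = mean_by_segment(responses)
print("overall:", overall)
print("by segment:", by_segment)
```

In this made-up data the overall mean sits at the midpoint of the scale, while new users are clearly struggling and power users are clearly happy, which would call for different follow-up actions.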

5. Not Acting on Feedback

Not making product changes after collecting responses is one of the worst mistakes you can make. Users will expect their feedback to affect product changes, so be sure to analyze your survey data, prioritize any changes, implement them, and communicate back to your survey participants.

A product feedback survey is only valuable after you have done something with the feedback received.

Conclusion

A well-run product feedback survey is an effective mechanism for understanding your users, improving your product, and making evidence-based decisions. When you identify clear goals, segment your users, ask the right questions, and thoughtfully analyze the responses, you will develop actionable insights that support meaningful change.

Some common pitfalls to avoid are asking too many questions, asking leading questions, asking questions at an inopportune time, and not taking action based on what you have learned. Using a systematic approach from planning to distribution to analysis to communication will ensure that your survey provides value.

In the end, systematically collecting and acting on survey feedback will help you build stronger relationships with your users, increase satisfaction, and create loyalty over time. Done well, a product feedback survey is no longer just an item on your to-do list; it becomes part of your product strategy.